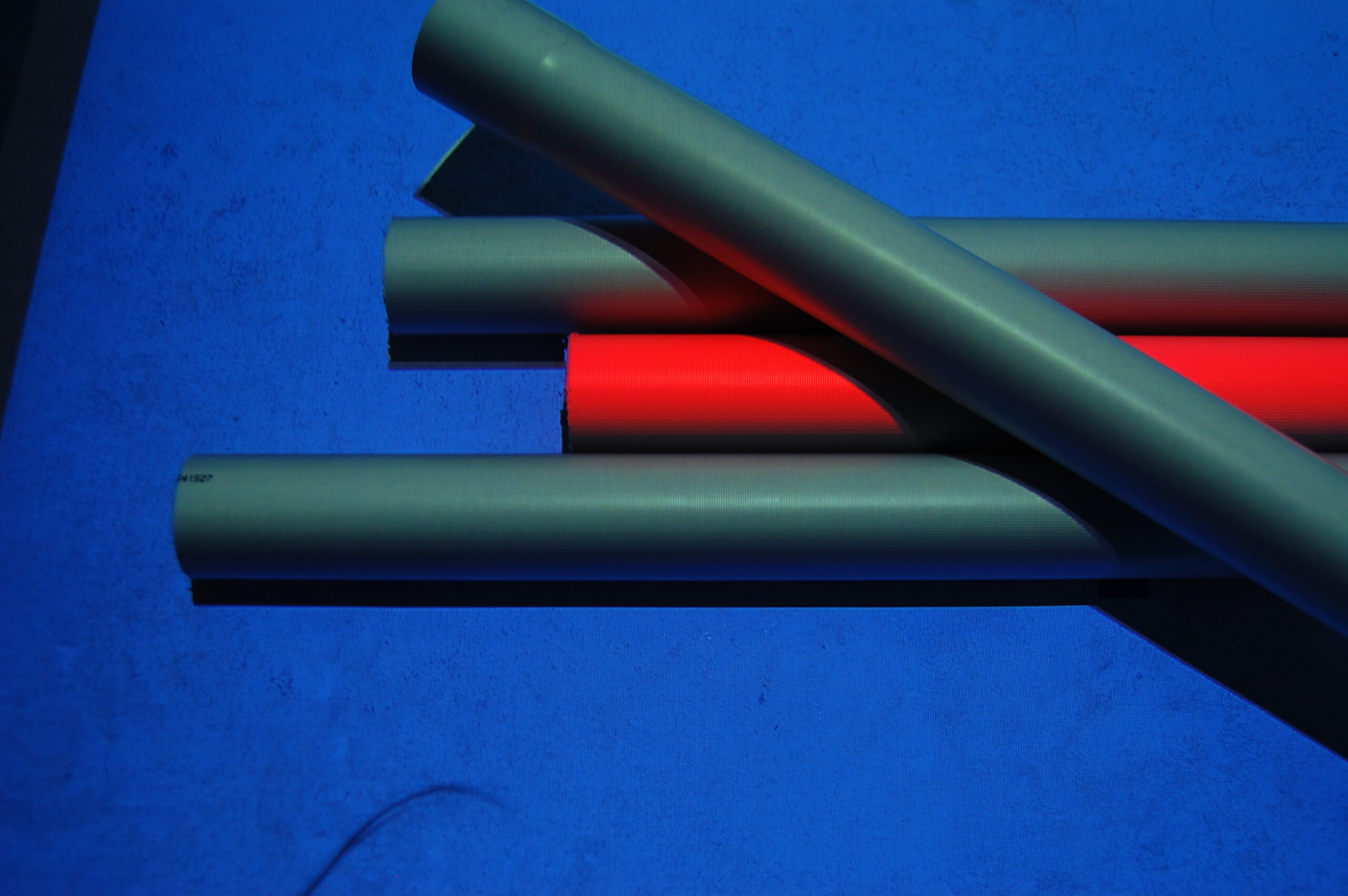

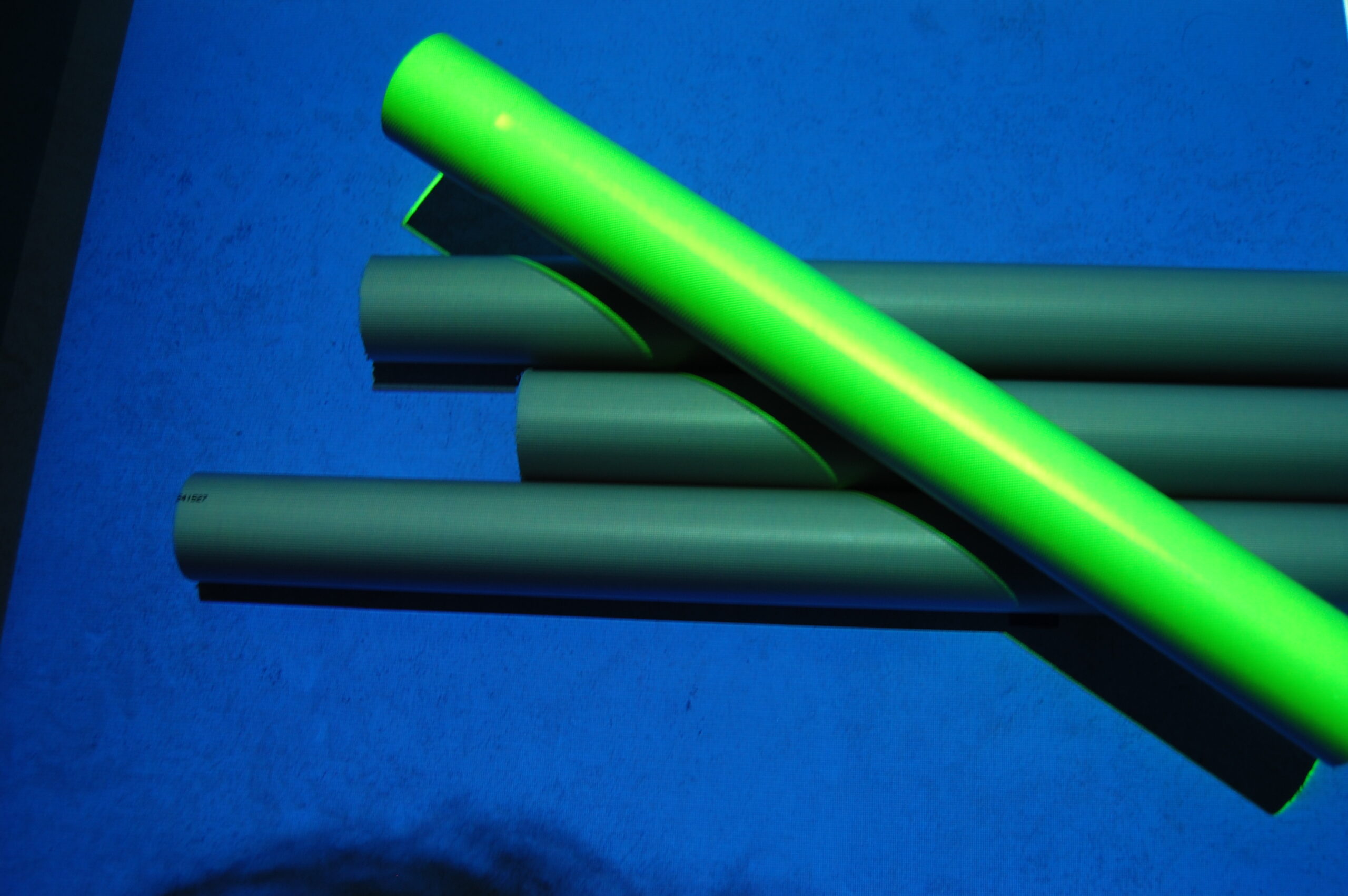

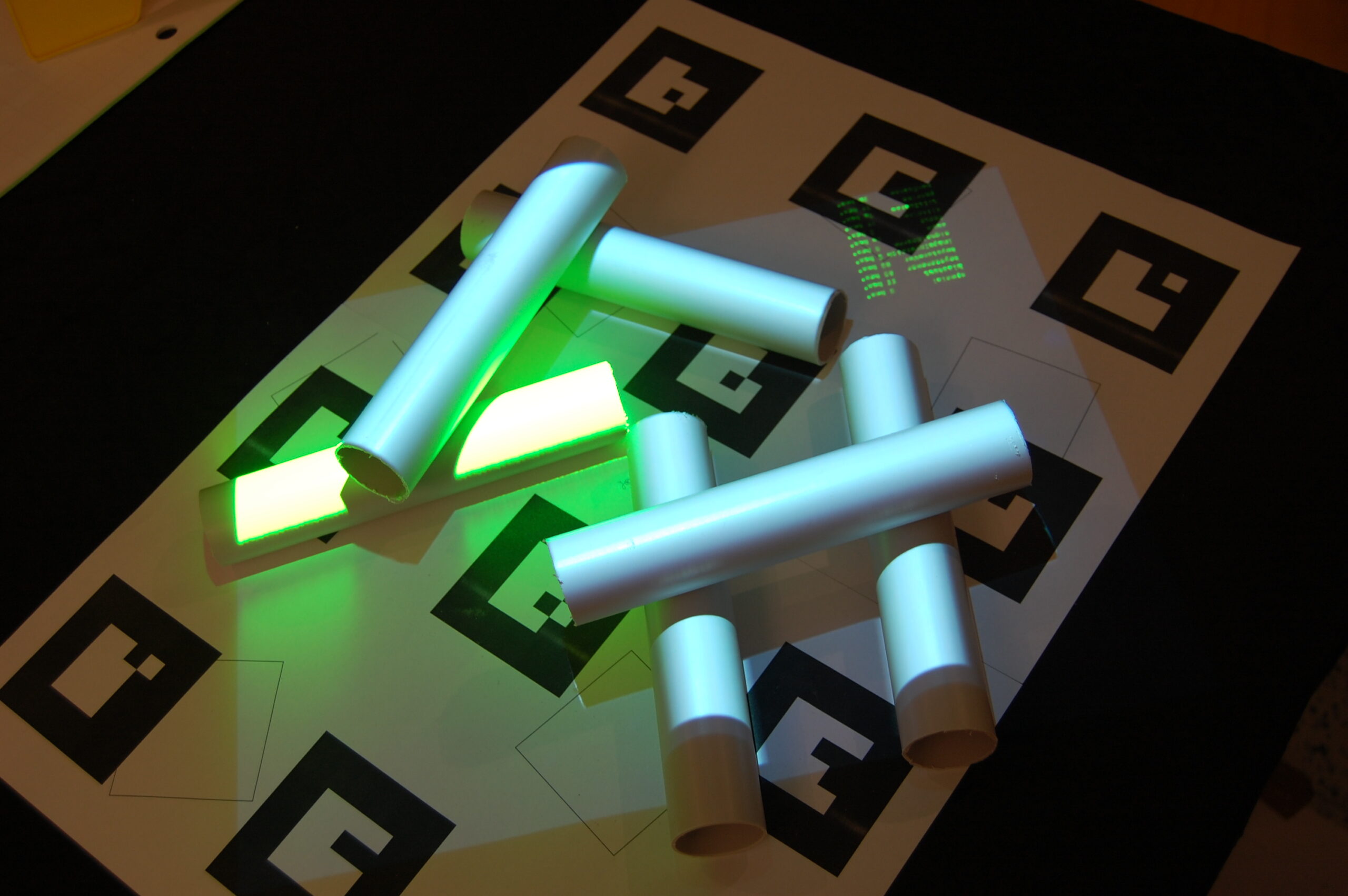

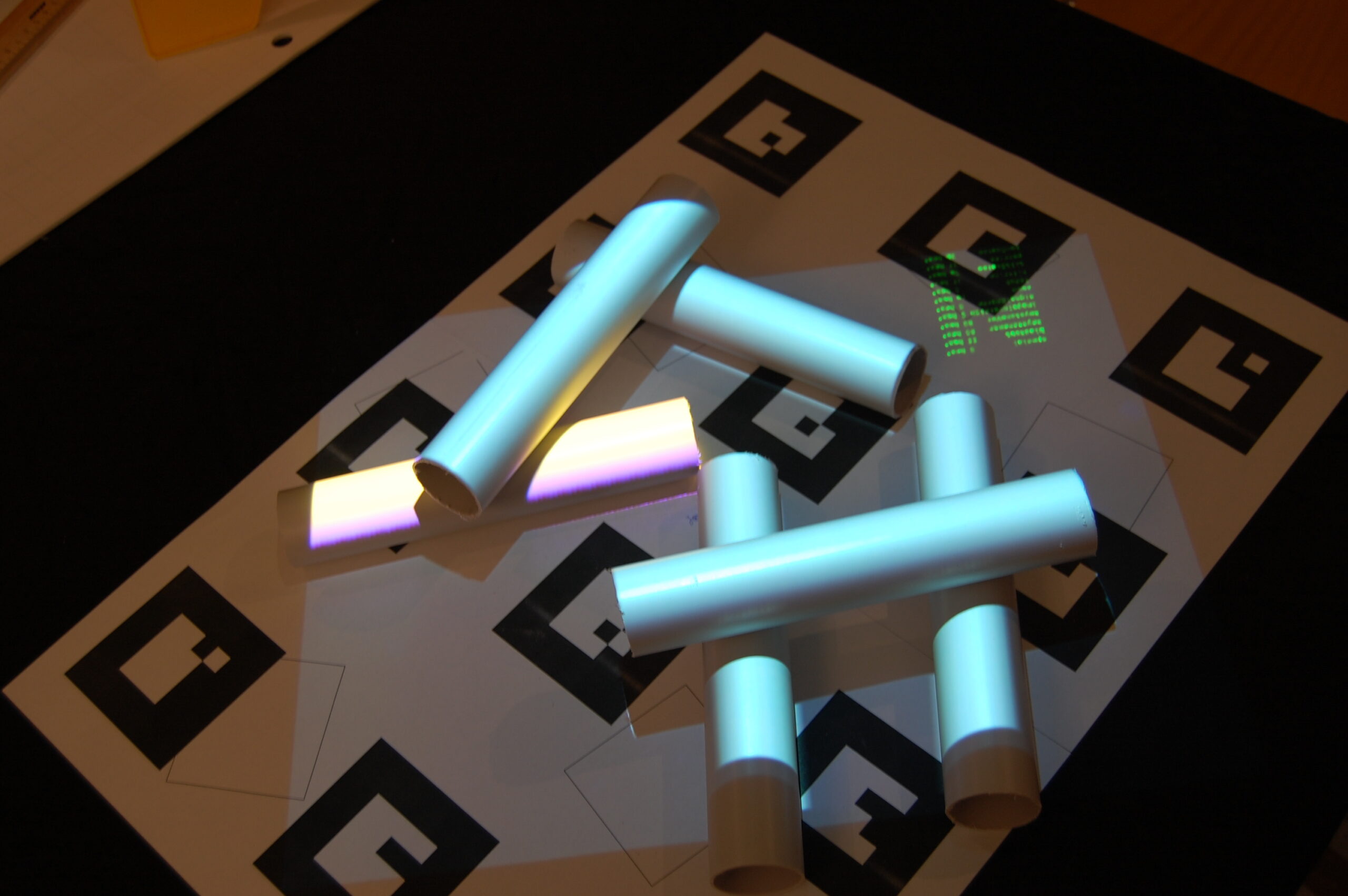

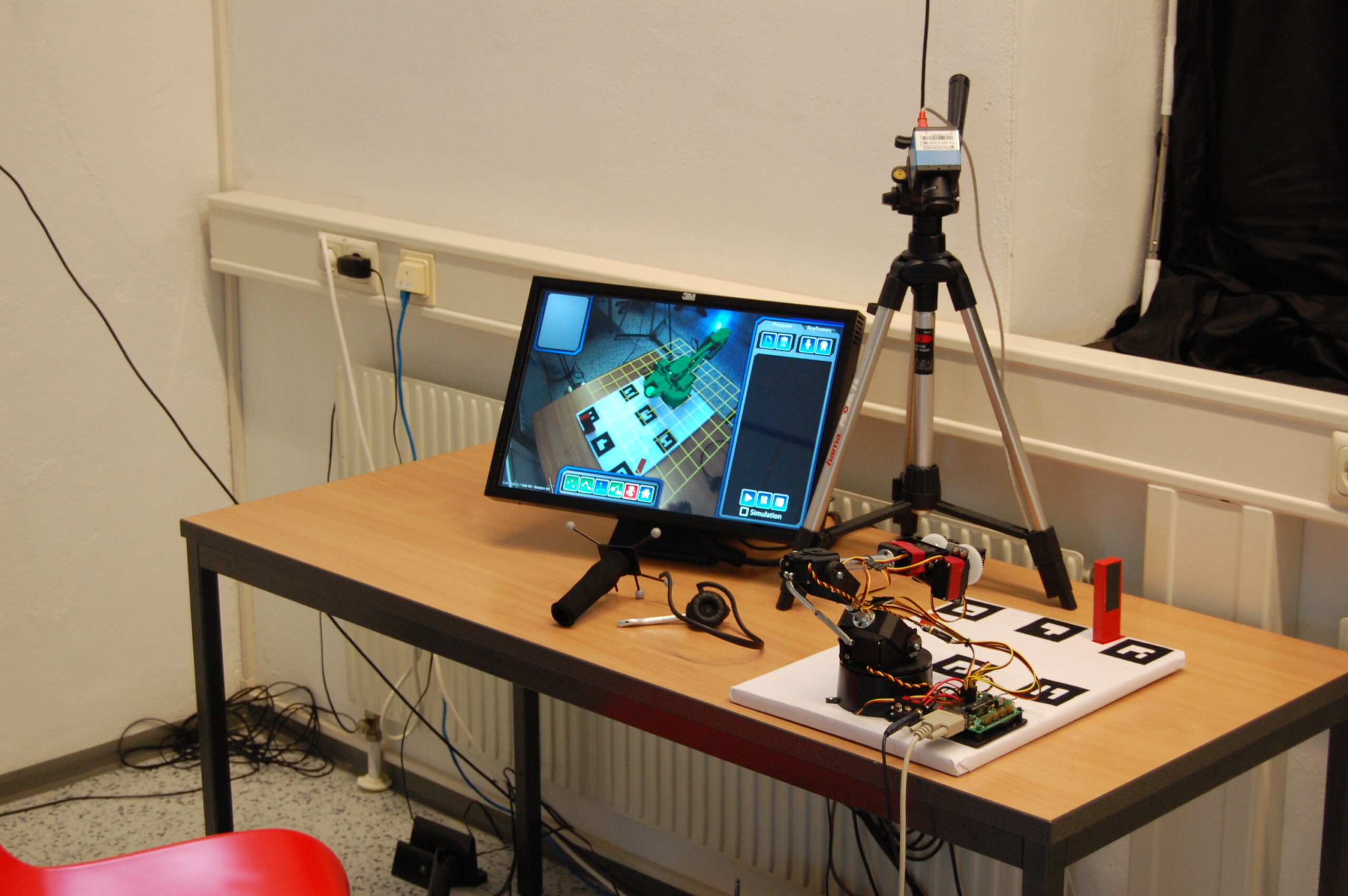

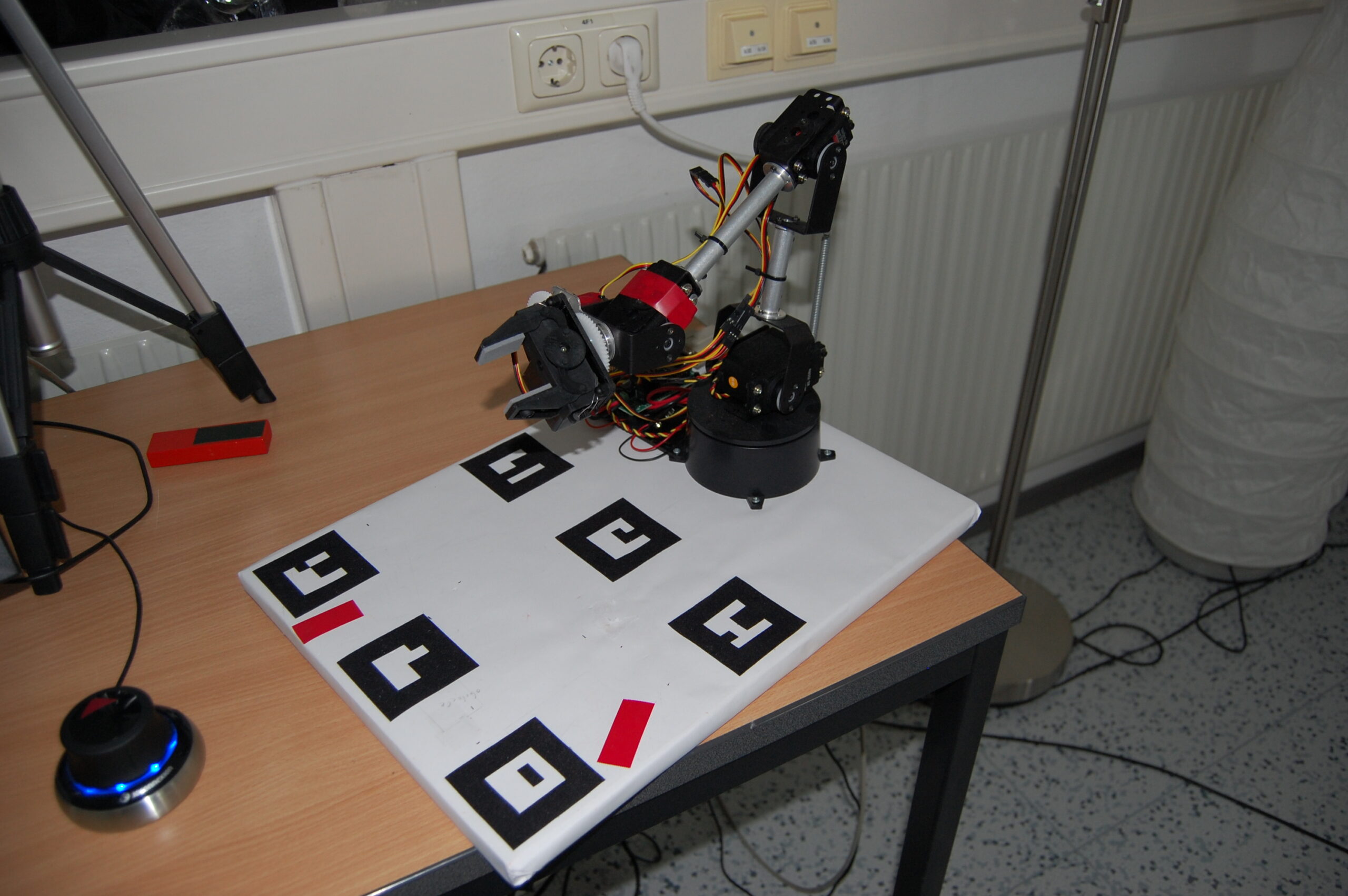

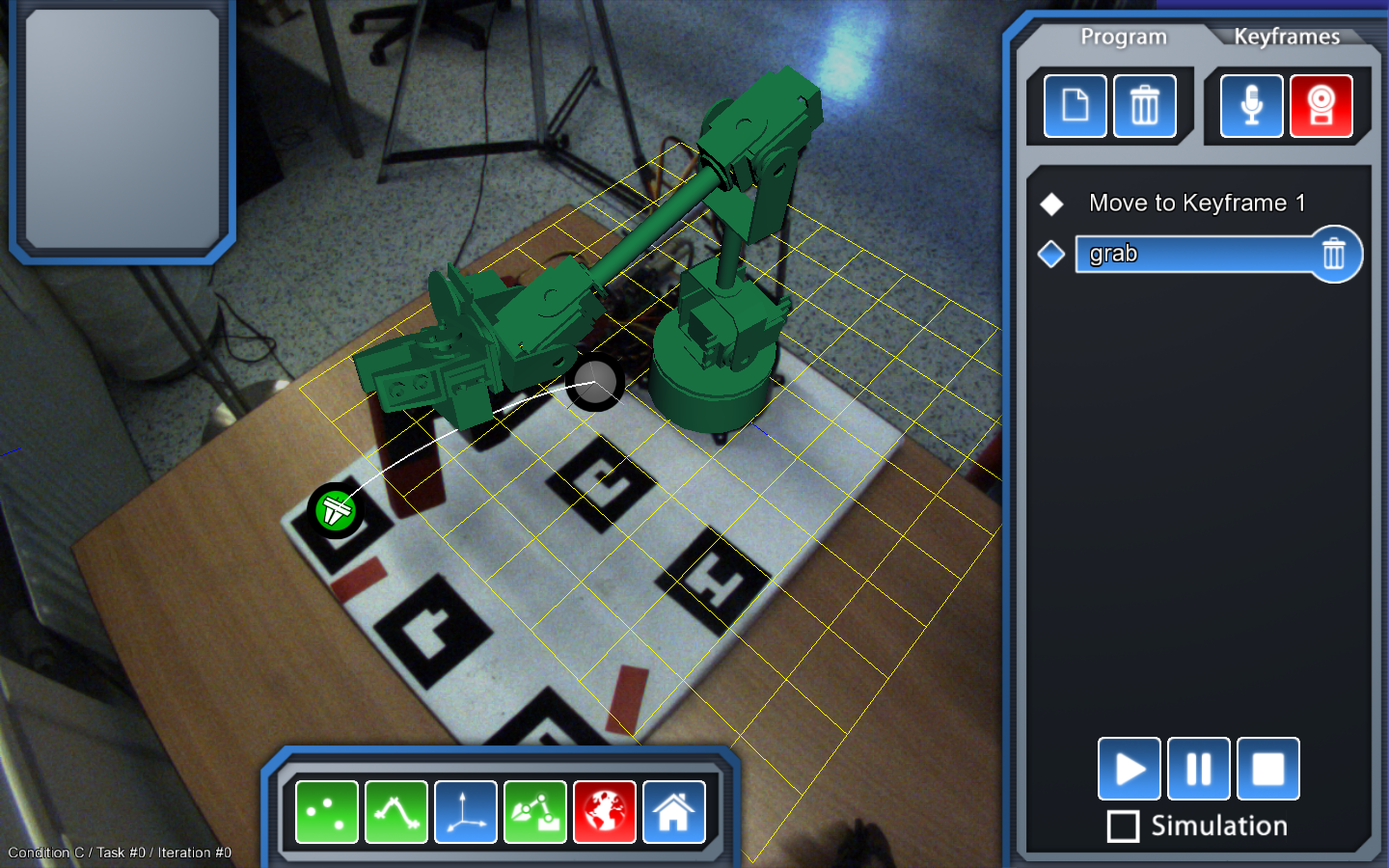

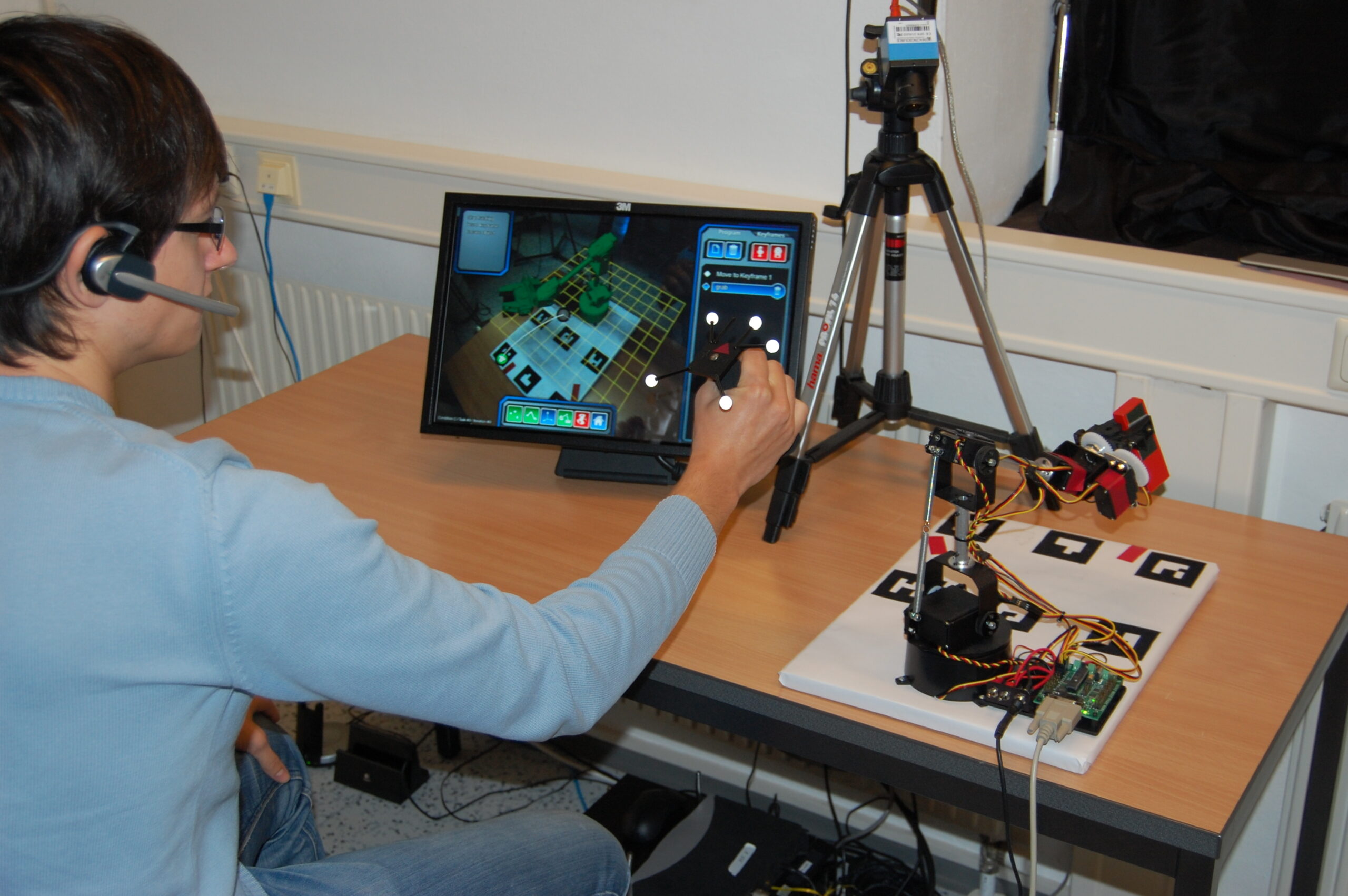

While current systems for controlling industrial robots are very efficient, their programming interfaces are still too complicated, time-consuming, and cumbersome. In this paper, we present AHUMARI, a new human-robot interaction method for controlling and programming a robotic arm. With AHUMARI, operators use a multimodal programming technique that combines an optically tracked 3D stick, speech input, and an Augmented Reality (AR) visualization. We also implemented a prototype that simulates the status-quo Teach Pendant interfaces commonly used for programming industrial robots. To validate our interaction design, we conducted a user study to gather both quantitative and qualitative data. In summary, the AHUMARI interaction technique was rated superior in all aspects. It was found to be easier to learn and to provide better task completion time and positioning accuracy.