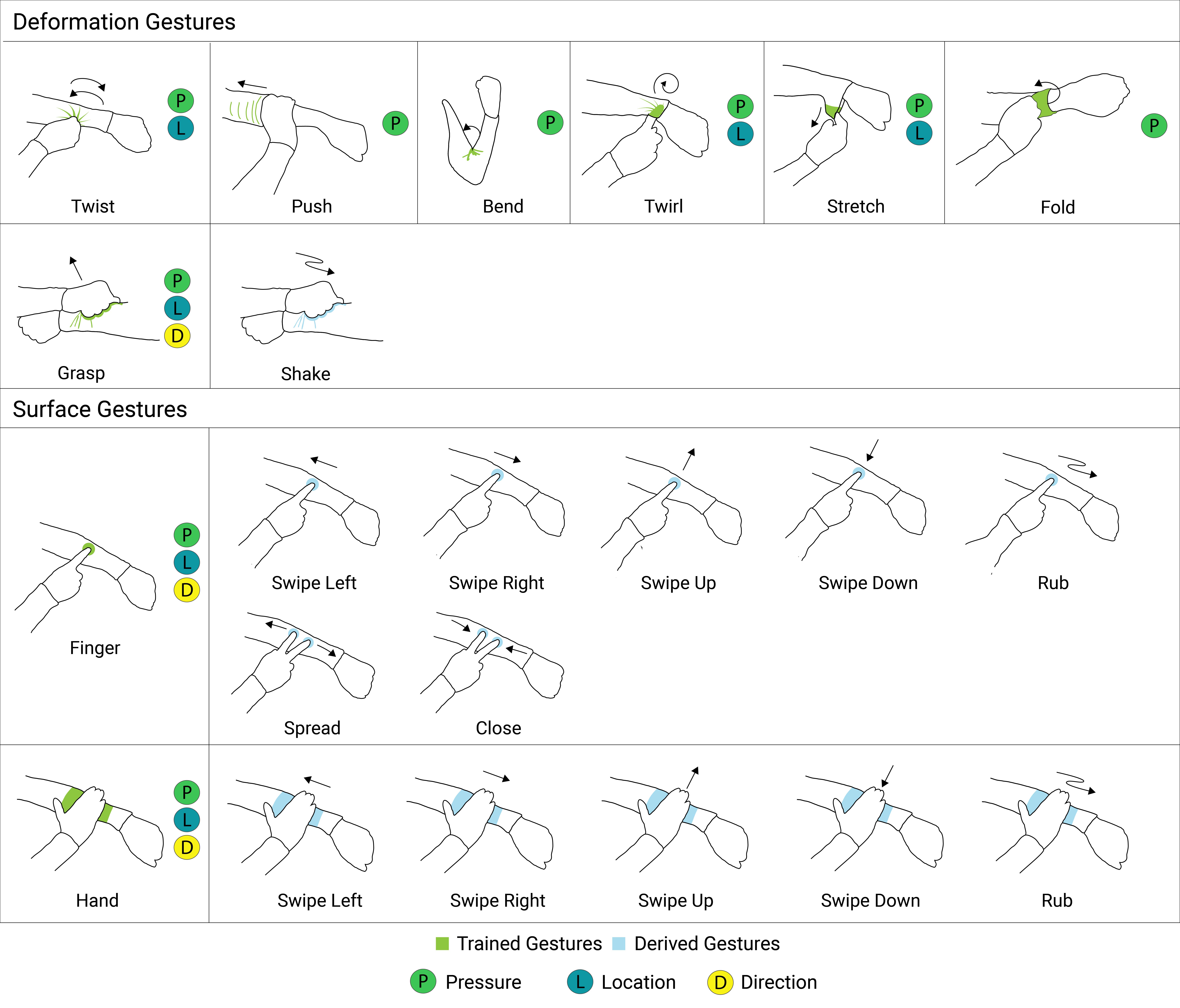

Over the last decades, there have been numerous efforts in wearable computing research to enable interactive textiles. Most work, however, focuses on integrating sensors for planar touch gestures, and thus does not take full advantage of the flexible, deformable, and tangible material properties of textiles. In this work, we introduce SmartSleeve, a deformable textile sensor that can sense both surface and deformation gestures in real time. It expands the gesture vocabulary with a range of expressive interaction techniques, and we explore new opportunities using advanced deformation gestures such as Twirl, Twist, Fold, Push, and Stretch. We describe our sensor design, hardware implementation, and its novel non-rigid connector architecture. We provide a detailed description of our hybrid gesture detection pipeline, which uses learning-based algorithms and heuristics to enable real-time gesture detection and tracking. Its modular architecture allows us to derive new gestures by combining them with continuous properties like pressure, location, and direction. Finally, we report on the promising results from our evaluations, which demonstrate real-time classification.

Introduction

Computing technologies have become mobile and ubiquitous and, as predicted by Mark Weiser, weave themselves into the fabric of everyday life. While many objects and surfaces have been augmented with interactive capabilities, including smartphones, tabletops, walls, and entire floors, making clothing interactive is still an ongoing challenge. Over the last few decades, much research in wearable computing has focused on integrating sensors into textiles. Most of the existing work in the design space of interactive clothing focuses on surface gestures, i.e., planar interactions such as touch and pressure. Basic deformation has also been shown, with stretch and rolling used for 1D input.

In this work, we introduce SmartSleeve, a deformable textile sensor that can sense both touch and deformations in real time. Our hybrid gesture detection framework uses a learning-based algorithm and heuristics to greatly expand the possible interactions for flexible, pressure-sensitive textile sensors: its unified pipeline senses both 2D surface gestures and more complex 2.5D deformation gestures. Furthermore, its modular architecture allows us to derive new gestures by combining them with continuous properties like pressure, location, and direction. Thus, our approach allows us to go beyond the touchscreen emulation and basic deformations found in previous work. We particularly emphasize the opportunity to enable both isotonic and isometric/elastic input, as well as state-changing interaction with integrated passive haptic feedback. This enables us to support a wide range of deformation gestures, such as Bend, Twist, Pinch, Shake, Stretch, and Fold. We further explore multi-modal input by combining pressure with deformation.

Summarizing, the main contributions of this paper are:

- A hybrid gesture detection pipeline that uses learning-based algorithms and heuristics to enable real-time gesture detection and tracking for flexible textile sensors.

- Two user studies that show the feasibility and accuracy of our gesture detection algorithm.

- A flexible, resistive pressure-sensing textile sensor with a novel non-rigid connector architecture, in which a sewn connection links the textile sensor to the electronics.

- A set of novel interaction techniques, which arise from the combination of surface gestures, deformation gestures, and continuous parameters (pressure, location, and direction).
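To make the idea of combining a discrete gesture with continuous parameters more concrete, the following is a minimal sketch, not the SmartSleeve implementation. It assumes the sensor delivers each frame as a 2D grid of non-negative pressure readings and that a gesture label has already been produced by some classifier. The names `continuous_params`, `direction_deg`, and `parameterize` are hypothetical, as is the choice of total pressure and a pressure-weighted centroid as the "pressure" and "location" properties.

```python
from dataclasses import dataclass
from math import atan2, degrees


@dataclass
class ContinuousParams:
    pressure: float   # sum of all cell readings in the frame (assumed measure)
    location: tuple   # pressure-weighted centroid as (row, col)


def continuous_params(frame):
    """Derive pressure and location from one frame (a list of rows)."""
    total = sum(sum(row) for row in frame)
    if total == 0:
        return None  # nothing is touching the sensor
    r = sum(i * v for i, row in enumerate(frame) for v in row) / total
    c = sum(j * v for row in frame for j, v in enumerate(row)) / total
    return ContinuousParams(total, (r, c))


def direction_deg(prev, curr):
    """Angle of centroid motion between two frames, in degrees.

    0 degrees means movement toward increasing column index.
    """
    dr = curr.location[0] - prev.location[0]
    dc = curr.location[1] - prev.location[1]
    return degrees(atan2(dr, dc))


def parameterize(label, prev_frame, curr_frame):
    """Attach continuous parameters to an already-classified gesture label."""
    prev = continuous_params(prev_frame)
    curr = continuous_params(curr_frame)
    if prev is None or curr is None:
        return {"gesture": label}
    return {
        "gesture": label,
        "pressure": curr.pressure,
        "location": curr.location,
        "direction_deg": direction_deg(prev, curr),
    }


if __name__ == "__main__":
    frame_a = [[0, 0, 0], [0, 4, 0], [0, 0, 0]]
    frame_b = [[0, 0, 0], [0, 0, 8], [0, 0, 0]]
    print(parameterize("Push", frame_a, frame_b))
```

In this sketch the same label, for example Push, yields distinct interaction events depending on how hard, where, and in which direction the gesture is performed, which is the kind of combination the last contribution refers to.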